Reading a File in Java Using Scanner

Reading files in Java is the cause of a lot of confusion. There are multiple ways of accomplishing the same task and it's often not clear which file reading method is best to use. Something that's quick and dirty for a small example file might not be the best method to use when you need to read a very large file. Something that worked in an earlier Java version might not be the preferred method anymore.

This article aims to be the definitive guide for reading files in Java 7, 8 and 9. I'm going to cover all the ways you can read files in Java. Too often, you'll read an article that tells you one way to read a file, only to discover later that there are other ways to do that. I'm actually going to cover 15 different ways to read a file in Java. I'm going to cover reading files in multiple ways with the core Java libraries as well as two third party libraries.

But that's not all: what good is knowing how to do something in multiple ways if you don't know which way is best for your situation?

I also put each of these methods to a real performance test and documented the results. That way, you will have some hard data to know the performance metrics of each method.

Methodology

JDK Versions

Java code samples don't live in isolation, especially when it comes to Java I/O, as the API keeps evolving. All code for this article has been tested on:

- Java SE 7 (jdk1.7.0_80)

- Java SE 8 (jdk1.8.0_162)

- Java SE 9 (jdk-9.0.4)

When there is an incompatibility, it will be stated in that section. Otherwise, the code works unaltered for different Java versions. The main incompatibility is the use of lambda expressions, which were introduced in Java 8.

Java File Reading Libraries

There are multiple ways of reading from files in Java. This article aims to be a comprehensive collection of all the different methods. I will cover:

- java.io.FileReader.read()

- java.io.BufferedReader.readLine()

- java.io.FileInputStream.read()

- java.io.BufferedInputStream.read()

- java.nio.file.Files.readAllBytes()

- java.nio.file.Files.readAllLines()

- java.nio.file.Files.lines()

- java.util.Scanner.nextLine()

- org.apache.commons.io.FileUtils.readLines() – Apache Commons

- com.google.common.io.Files.readLines() – Google Guava

Closing File Resources

Prior to JDK7, when opening a file in Java, all file resources would need to be manually closed using a try-catch-finally block. JDK7 introduced the try-with-resources statement, which simplifies the process of closing streams. You no longer need to write explicit code to close streams because the stream is closed automatically when the try block exits, whether an exception occurred or not. All examples used in this article use the try-with-resources statement for importing, loading, parsing and closing files.
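As a rough illustration of the difference, the sketch below reads the first line of a file twice: once with a manual try-finally block in the pre-JDK 7 style and once with try-with-resources. The class name, the method names and the temporary sample file are made up for this illustration and are not part of the article's code.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class CloseResourcesSketch {

    // Pre-JDK 7 style: the reader must be closed by hand in a finally block.
    public static String firstLineManualClose(String fileName) throws IOException {
        BufferedReader reader = null;
        try {
            reader = new BufferedReader(new FileReader(fileName));
            return reader.readLine();
        } finally {
            if (reader != null) {
                reader.close();
            }
        }
    }

    // JDK 7+ style: the reader is closed automatically when the try block exits.
    public static String firstLineTryWithResources(String fileName) throws IOException {
        try (BufferedReader reader = new BufferedReader(new FileReader(fileName))) {
            return reader.readLine();
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file, created here so the sketch is self-contained.
        Path sample = Files.createTempFile("sample", ".txt");
        Files.write(sample, "first line\nsecond line\n".getBytes(StandardCharsets.US_ASCII));

        System.out.println(firstLineManualClose(sample.toString()));
        System.out.println(firstLineTryWithResources(sample.toString()));

        Files.delete(sample);
    }
}
```

Both methods return the same result; the second one simply has less code that can go wrong.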

File Location

All examples will read test files from C:\temp.

Encoding

Character encoding is not explicitly saved with text files so Java makes assumptions about the encoding when reading files. Usually, the assumption is correct but sometimes you want to be explicit when instructing your programs to read from files. When the encoding isn't right, you'll see funny characters appear when reading files.

All examples for reading text files use two encoding variations:

- Default system encoding, where no encoding is specified

- Explicitly setting the encoding to UTF-8
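To make the "funny characters" concrete, here is a small sketch that decodes the same bytes with two different charsets. The class and method names are invented for this illustration; it only uses the standard Charset and StandardCharsets classes.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class EncodingSketch {

    // Decodes raw bytes into text using the given charset.
    public static String decode(byte[] bytes, Charset charset) {
        return new String(bytes, charset);
    }

    public static void main(String[] args) {
        // "cafe" with an accented e, encoded as UTF-8: the accented letter takes two bytes.
        byte[] utf8Bytes = "caf\u00e9".getBytes(StandardCharsets.UTF_8);

        // The charset Java falls back to when none is specified.
        System.out.println("Default charset: " + Charset.defaultCharset());

        // Correct charset: the original four characters come back.
        System.out.println("Decoded as UTF-8:      " + decode(utf8Bytes, StandardCharsets.UTF_8));

        // Wrong charset: the two bytes of the accented letter become two unrelated characters.
        System.out.println("Decoded as ISO-8859-1: " + decode(utf8Bytes, StandardCharsets.ISO_8859_1));
    }
}
```

What the default charset line prints depends on your machine, which is exactly why being explicit can matter.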

Download Code

All code files are available from GitHub.

Code Quality and Code Encapsulation

There is a difference between writing code for your personal or work project and writing code to explain and teach concepts.

If I were writing this code for my own project, I would use proper object-oriented principles like encapsulation, abstraction, polymorphism, etc. But I wanted to make each example stand alone and be easily understood, which meant that some of the code has been copied from one example to the next. I did this on purpose because I didn't want the reader to have to figure out all the encapsulation and object structures I so cleverly created. That would take away from the examples.

For the same reason, I chose NOT to write these examples with a unit testing framework like JUnit or TestNG because that's not the purpose of this article. That would add another library for the reader to understand that has nothing to do with reading files in Java. That's why all the examples are written inline inside the main method, without extra methods or classes.

My main purpose is to make the examples as easy to understand as possible and I believe that having extra unit testing and encapsulation code will not help with this. That doesn't mean that's how I would encourage you to write your own personal code. It's just the way I chose to write the examples in this article to make them easier to understand.

Exception Handling

All examples declare any checked exceptions in the throwing method declaration.

The purpose of this article is to show all the different ways to read from files in Java. It's not meant to show how to handle exceptions, which will be very specific to your situation.

So instead of creating unhelpful try-catch blocks that just print exception stack traces and clutter up the code, all examples will declare any checked exception in the calling method. This will make the code cleaner and easier to understand without sacrificing any functionality.

Future Updates

As Java file reading evolves, I will be updating this article with any required changes.

File Reading Methods

I organized the file reading methods into three groups:

- Classic I/O classes that have been part of Java since before JDK 1.7. This includes the java.io and java.util packages.

- New Java I/O classes that have been part of Java since JDK1.7. This covers the java.nio.file.Files class.

- Third party I/O classes from the Apache Commons and Google Guava projects.

Classic I/O – Reading Text

1a) FileReader – Default Encoding

FileReader reads in one character at a time, without any buffering. It's meant for reading text files. It uses the default character encoding on your system, so I have provided examples for both the default case, as well as specifying the encoding explicitly.

    import java.io.FileReader;
    import java.io.IOException;

    public class ReadFile_FileReader_Read {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";

            try (FileReader fileReader = new FileReader(fileName)) {
                int singleCharInt;
                char singleChar;
                // read() returns -1 once the end of the file is reached
                while ((singleCharInt = fileReader.read()) != -1) {
                    singleChar = (char) singleCharInt;
                    // display one character at a time
                    System.out.print(singleChar);
                }
            }
        }
    }

1b) FileReader – Explicit Encoding (InputStreamReader)

In the Java versions covered here, it's not possible to set the encoding explicitly on a FileReader, so you have to use the parent class, InputStreamReader, and wrap it around a FileInputStream:

    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStreamReader;

    public class ReadFile_FileReader_Read_Encoding {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            FileInputStream fileInputStream = new FileInputStream(fileName);

            // specify UTF-8 encoding explicitly;
            // closing the reader also closes the wrapped stream
            try (InputStreamReader inputStreamReader =
                    new InputStreamReader(fileInputStream, "UTF-8")) {
                int singleCharInt;
                char singleChar;
                while ((singleCharInt = inputStreamReader.read()) != -1) {
                    singleChar = (char) singleCharInt;
                    System.out.print(singleChar); // display one character at a time
                }
            }
        }
    }

2a) BufferedReader – Default Encoding

BufferedReader reads an entire line at a time, instead of one character at a time like FileReader. It's meant for reading text files.

    import java.io.BufferedReader;
    import java.io.FileReader;
    import java.io.IOException;

    public class ReadFile_BufferedReader_ReadLine {
        public static void main(String[] args) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            FileReader fileReader = new FileReader(fileName);

            try (BufferedReader bufferedReader = new BufferedReader(fileReader)) {
                String line;
                // readLine() returns null once the end of the file is reached
                while ((line = bufferedReader.readLine()) != null) {
                    System.out.println(line);
                }
            }
        }
    }

2b) BufferedReader – Explicit Encoding

In a similar way to how we set the encoding explicitly for FileReader, we need to create a FileInputStream, wrap it inside an InputStreamReader with an explicit encoding and pass that to BufferedReader:

    import java.io.BufferedReader;
    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStreamReader;

    public class ReadFile_BufferedReader_ReadLine_Encoding {
        public static void main(String[] args) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";

            FileInputStream fileInputStream = new FileInputStream(fileName);
            // specify UTF-8 encoding explicitly
            InputStreamReader inputStreamReader = new InputStreamReader(fileInputStream, "UTF-8");

            try (BufferedReader bufferedReader = new BufferedReader(inputStreamReader)) {
                String line;
                while ((line = bufferedReader.readLine()) != null) {
                    System.out.println(line);
                }
            }
        }
    }

Classic I/O – Reading Bytes

1) FileInputStream

FileInputStream reads in one byte at a time, without any buffering. While it's meant for reading binary files such as images or audio files, it can still be used to read text files. It's similar to reading with FileReader in that you're reading one value at a time as an integer and you need to cast that int to a char to see the character. Keep in mind that the cast only gives the right character when each character is stored as a single byte, as with ASCII text.

Unlike FileReader, FileInputStream returns raw bytes and applies no character encoding, so there is only one example for it.

    import java.io.File;
    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.IOException;

    public class ReadFile_FileInputStream_Read {
        public static void main(String[] pArgs) throws FileNotFoundException, IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            try (FileInputStream fileInputStream = new FileInputStream(file)) {
                int singleCharInt;
                char singleChar;

                // read() returns one byte (0-255) or -1 at end of file
                while ((singleCharInt = fileInputStream.read()) != -1) {
                    singleChar = (char) singleCharInt;
                    System.out.print(singleChar);
                }
            }
        }
    }

2) BufferedInputStream

BufferedInputStream reads a set of bytes all at once into an internal byte array buffer. The buffer size can be set explicitly or left at the default, which is what we'll demonstrate in our example. The default buffer size appears to be 8KB but I have not explicitly verified this. All performance tests used the default buffer size so it will automatically re-size the buffer when it needs to.

    import java.io.BufferedInputStream;
    import java.io.File;
    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.IOException;

    public class ReadFile_BufferedInputStream_Read {
        public static void main(String[] pArgs) throws FileNotFoundException, IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);
            FileInputStream fileInputStream = new FileInputStream(file);

            // default buffer size; closing the wrapper also closes the file stream
            try (BufferedInputStream bufferedInputStream = new BufferedInputStream(fileInputStream)) {
                int singleCharInt;
                char singleChar;
                while ((singleCharInt = bufferedInputStream.read()) != -1) {
                    singleChar = (char) singleCharInt;
                    System.out.print(singleChar);
                }
            }
        }
    }
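If you want to set the buffer size explicitly, BufferedInputStream has a second constructor that takes the size in bytes. The sketch below is only an illustration: the class name, the helper method and the 64 KB value are my own choices for the example and were not used in the performance tests.

```java
import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

public class BufferSizeSketch {

    // Reads the file byte by byte through a buffer of the requested size
    // and returns how many bytes were read.
    public static long countBytes(String fileName, int bufferSize) throws IOException {
        long count = 0;
        try (BufferedInputStream in =
                new BufferedInputStream(new FileInputStream(fileName), bufferSize)) {
            while (in.read() != -1) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file, created here so the sketch is self-contained.
        Path sample = Files.createTempFile("sample", ".bin");
        Files.write(sample, new byte[100_000]);

        // 64 KB is an arbitrary value chosen for illustration.
        System.out.println(countBytes(sample.toString(), 64 * 1024));

        Files.delete(sample);
    }
}
```

The buffer size changes how often the underlying file is accessed, not the result, so any positive size returns the same byte count.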

New I/O – Reading Text

1a) Files.readAllLines() – Default Encoding

The Files class is part of the new Java I/O classes introduced in jdk1.7. It only has static utility methods for working with files and directories.

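The single-argument readAllLines(Path) overload was introduced in jdk1.8, so this example will not work in Java 7. Note that this overload decodes the file as UTF-8 rather than using the system default encoding, so "default" here means the method's own default.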

    import java.io.File;
    import java.io.IOException;
    import java.nio.file.Files;
    import java.util.List;

    public class ReadFile_Files_ReadAllLines {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // reads the whole file into memory as a list of lines
            List<String> fileLinesList = Files.readAllLines(file.toPath());
            for (String line : fileLinesList) {
                System.out.println(line);
            }
        }
    }

1b) Files.readAllLines() – Explicit Encoding

    import java.io.File;
    import java.io.IOException;
    import java.nio.charset.StandardCharsets;
    import java.nio.file.Files;
    import java.util.List;

    public class ReadFile_Files_ReadAllLines_Encoding {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // use UTF-8 encoding
            List<String> fileLinesList = Files.readAllLines(file.toPath(), StandardCharsets.UTF_8);

            for (String line : fileLinesList) {
                System.out.println(line);
            }
        }
    }

2a) Files.lines() – Default Encoding

This code was tested to work in Java 8 and 9. It does not run on Java 7 because of the lack of support for lambda expressions. Like readAllLines(Path), the single-argument Files.lines(Path) decodes the file as UTF-8.

    import java.io.File;
    import java.io.IOException;
    import java.nio.file.Files;
    import java.util.stream.Stream;

    public class ReadFile_Files_Lines {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // the stream holds the file open, so it is closed by try-with-resources
            try (Stream<String> linesStream = Files.lines(file.toPath())) {
                linesStream.forEach(line -> {
                    System.out.println(line);
                });
            }
        }
    }

2b) Files.lines() – Explicit Encoding

Just like in the previous example, this code was tested and works in Java 8 and 9 but not in Java 7.

    import java.io.File;
    import java.io.IOException;
    import java.nio.charset.StandardCharsets;
    import java.nio.file.Files;
    import java.util.stream.Stream;

    public class ReadFile_Files_Lines_Encoding {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // use UTF-8 encoding
            try (Stream<String> linesStream = Files.lines(file.toPath(), StandardCharsets.UTF_8)) {
                linesStream.forEach(line -> {
                    System.out.println(line);
                });
            }
        }
    }

3a) Scanner – Default Encoding

The Scanner class has been part of Java since JDK 1.5 and can be used to read from files or from the console (user input).

    import java.io.File;
    import java.io.FileNotFoundException;
    import java.util.Scanner;

    public class ReadFile_Scanner_NextLine {
        public static void main(String[] pArgs) throws FileNotFoundException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            try (Scanner scanner = new Scanner(file)) {
                String line;
                // hasNextLine() returns false once the end of the file is reached
                while (scanner.hasNextLine()) {
                    line = scanner.nextLine();
                    System.out.println(line);
                }
            }
        }
    }

3b) Scanner – Explicit Encoding

    import java.io.File;
    import java.io.FileNotFoundException;
    import java.util.Scanner;

    public class ReadFile_Scanner_NextLine_Encoding {
        public static void main(String[] pArgs) throws FileNotFoundException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // use UTF-8 encoding
            try (Scanner scanner = new Scanner(file, "UTF-8")) {
                String line;
                while (scanner.hasNextLine()) {
                    line = scanner.nextLine();
                    System.out.println(line);
                }
            }
        }
    }

New I/O – Reading Bytes

Files.readAllBytes()

Even though the documentation for this method states that "it is not intended for reading in large files", I found this to be the absolute best performing file reading method, even on files as large as 1GB.

    import java.io.File;
    import java.io.IOException;
    import java.nio.file.Files;

    public class ReadFile_Files_ReadAllBytes {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // reads the whole file into memory in one call
            byte[] fileBytes = Files.readAllBytes(file.toPath());
            char singleChar;
            for (byte b : fileBytes) {
                // the cast is only meaningful for single-byte (ASCII) text
                singleChar = (char) b;
                System.out.print(singleChar);
            }
        }
    }
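Casting each byte to a char, as in the example above, only works for plain ASCII text. If the file contains other characters, one option is to decode the whole byte array into a String with an explicit charset. The following is a minimal sketch of that idea; the class name, the readText helper and the sample text are hypothetical and were not part of the performance tests.

```java
import java.io.IOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class ReadAllBytesToStringSketch {

    // Reads the whole file into memory and decodes it with the given charset.
    public static String readText(Path path, Charset charset) throws IOException {
        byte[] fileBytes = Files.readAllBytes(path);
        return new String(fileBytes, charset);
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file containing non-ASCII characters (u-umlaut and sharp s).
        Path sample = Files.createTempFile("sample", ".txt");
        String original = "Gr\u00fc\u00dfe\n";
        Files.write(sample, original.getBytes(StandardCharsets.UTF_8));

        String text = readText(sample, StandardCharsets.UTF_8);
        System.out.println(text.equals(original)); // the text survives the round trip

        Files.delete(sample);
    }
}
```

Like readAllBytes() itself, this holds the entire file in memory, so the same caution about very large files applies.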

3rd Party I/O – Reading Text

Commons – FileUtils.readLines()

Apache Commons IO is an open source Java library that comes with utility classes for reading and writing text and binary files. I listed it in this article because it can be used instead of the built-in Java libraries. The class we're using is FileUtils.

For this article, version 2.6 was used, which is compatible with JDK 1.7+.

Note that you need to explicitly specify the encoding and that the method for using the default encoding has been deprecated.

    import java.io.File;
    import java.io.IOException;
    import java.util.List;

    import org.apache.commons.io.FileUtils;

    public class ReadFile_Commons_FileUtils_ReadLines {
        public static void main(String[] pArgs) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // the encoding must be given explicitly
            List<String> fileLinesList = FileUtils.readLines(file, "UTF-8");
            for (String line : fileLinesList) {
                System.out.println(line);
            }
        }
    }

Guava – Files.readLines()

Google Guava is an open source library that comes with utility classes for common tasks like collections handling, cache management, IO operations and string processing.

I listed it in this article because it can be used instead of the built-in Java libraries and I wanted to compare its performance with the Java built-in libraries.

For this article, version 23.0 was used.

I'm not going to examine all the different ways to read files with Guava, since this article is not meant for that. For a more detailed look at all the different ways to read and write files with Guava, take a look at Baeldung's in-depth article.

When reading a file, Guava requires that the character encoding be set explicitly, just like Apache Commons.

Compatibility note: This code was tested successfully on Java 8 and 9. I couldn't get it to work on Java 7 and kept getting an "Unsupported major.minor version 52.0" error (class file version 52.0 means the library was compiled for Java 8). Guava has a separate API doc for Java 7 which uses a slightly different version of the Files.readLines() method. I thought I could get it to work but I kept getting that error.

    import java.io.File;
    import java.io.IOException;
    import java.util.List;

    import com.google.common.base.Charsets;
    import com.google.common.io.Files;

    public class ReadFile_Guava_Files_ReadLines {
        public static void main(String[] args) throws IOException {
            String fileName = "c:\\temp\\sample-10KB.txt";
            File file = new File(fileName);

            // the encoding must be given explicitly
            List<String> fileLinesList = Files.readLines(file, Charsets.UTF_8);
            for (String line : fileLinesList) {
                System.out.println(line);
            }
        }
    }

Performance Testing

Since there are so many ways to read from a file in Java, a natural question is "What file reading method is the best for my situation?" So I decided to test each of these methods against each other using sample data files of different sizes and timing the results.

Each code sample from this article displays the contents of the file to a string and then to the console (System.out). However, during the performance tests the System.out line was commented out since it would seriously slow down the performance of each method.

Each performance test measures the time it takes to read in the file (line by line, character by character, or byte by byte) without displaying anything to the console. I ran each test 5 to 10 times and took the average so as not to let any outliers influence each test. I also ran the default encoding version of each file reading method, i.e. I didn't specify the encoding explicitly.
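The measuring approach described above can be sketched roughly as follows. This is not the exact harness used for the published numbers; the class name, method names and sample file are hypothetical, and the sketch assumes a simple System.nanoTime() stopwatch around a BufferedReader read with no JVM warm-up.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collections;

public class ReadTimingSketch {

    // One pass: reads every line without printing anything and returns the line count.
    public static long readAllLinesOnce(String fileName) throws IOException {
        long lines = 0;
        try (BufferedReader reader = new BufferedReader(new FileReader(fileName))) {
            while (reader.readLine() != null) {
                lines++;
            }
        }
        return lines;
    }

    // Repeats the read 'runs' times and returns the average elapsed time in milliseconds.
    public static double averageMillis(String fileName, int runs) throws IOException {
        long totalNanos = 0;
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            readAllLinesOnce(fileName);
            totalNanos += System.nanoTime() - start;
        }
        return totalNanos / 1_000_000.0 / runs;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file with 1000 identical lines.
        Path sample = Files.createTempFile("sample", ".txt");
        Files.write(sample, Collections.nCopies(1000, "some sample text"), StandardCharsets.UTF_8);

        System.out.printf("average: %.3f ms%n", averageMillis(sample.toString(), 5));

        Files.delete(sample);
    }
}
```

A simple stopwatch like this is easily affected by JIT compilation and disk caching, so treat the numbers it produces as rough comparisons only.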

Dev Setup

The dev environment used for these tests:

- Intel Core i7-3615 QM @2.3 GHz, 8GB RAM

- Windows 8 x64

- Eclipse IDE for Java Developers, Oxygen.2 Release (4.7.2)

- Java SE 9 (jdk-9.0.4)

Data Files

GitHub doesn't allow pushing files larger than 100 MB, so I couldn't find a practical way to store my large test files to allow others to replicate my tests. So instead of storing them, I'm providing the tools I used to generate them so you can create test files that are similar in size to mine. Obviously they won't be the same, but you'll generate files that are similar in size to the ones I used in my performance tests.

Random String Generator was used to generate sample text and then I simply copy-pasted to create larger versions of the file. When the file started getting too large to manage inside a text editor, I had to use the command line to merge multiple text files into a larger text file:

copy *.txt sample-1GB.txt

I created the following 7 data file sizes to test each file reading method across a range of file sizes:

- 1KB

- 10KB

- 100KB

- 1MB

- 10MB

- 100MB

- 1GB
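If you'd rather generate files of these sizes in code, something along the following lines should work. This is an alternative to the Random String Generator and copy approach described above, not the tool that produced the original test files; the class name, the generate method, the 80-character line length and the fixed seed are all assumptions made for this sketch.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Random;

public class SampleFileGeneratorSketch {

    private static final String ALPHABET =
            "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";
    private static final int LINE_LENGTH = 80;

    // Writes lines of random characters until at least targetBytes have been written.
    public static void generate(Path target, long targetBytes, long seed) throws IOException {
        Random random = new Random(seed);
        long written = 0;
        try (BufferedWriter writer = Files.newBufferedWriter(target, StandardCharsets.US_ASCII)) {
            while (written < targetBytes) {
                StringBuilder line = new StringBuilder(LINE_LENGTH + 1);
                for (int i = 0; i < LINE_LENGTH; i++) {
                    line.append(ALPHABET.charAt(random.nextInt(ALPHABET.length())));
                }
                line.append('\n');
                writer.write(line.toString());
                written += line.length(); // ASCII text: one byte per character
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical output file of roughly 10KB in the temp directory.
        Path target = Files.createTempFile("sample-10KB", ".txt");
        generate(target, 10 * 1024, 42L);
        System.out.println(target + " has " + Files.size(target) + " bytes");
    }
}
```

The file ends up slightly larger than the requested size because the last line is always written in full.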

Performance Summary

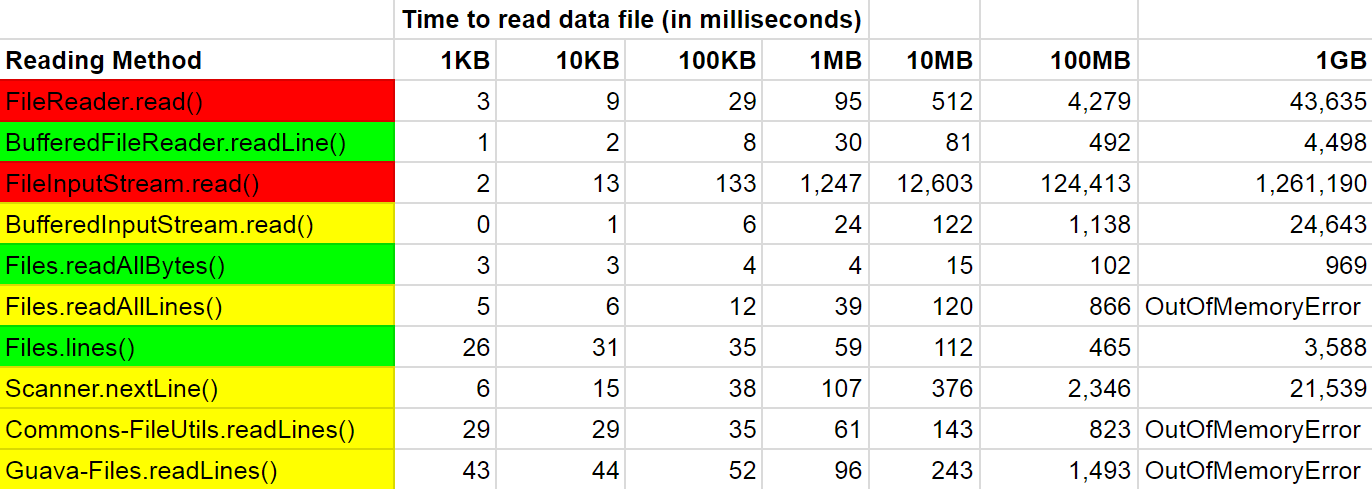

There were some surprises and some expected results from the performance tests.

As expected, the worst performers were the methods that read in a file character by character or byte by byte. But what surprised me was that the native Java IO libraries outperformed both 3rd party libraries, Apache Commons IO and Google Guava.

What's more, both Google Guava and Apache Commons IO threw a java.lang.OutOfMemoryError when trying to read in the 1 GB test file. This also happened with the Files.readAllLines(Path) method but the remaining 7 methods were able to read in all test files, including the 1GB test file.

The following table summarizes the average time (in milliseconds) each file reading method took to complete. I highlighted the top three methods in green, the average performing methods in yellow and the worst performing methods in red:

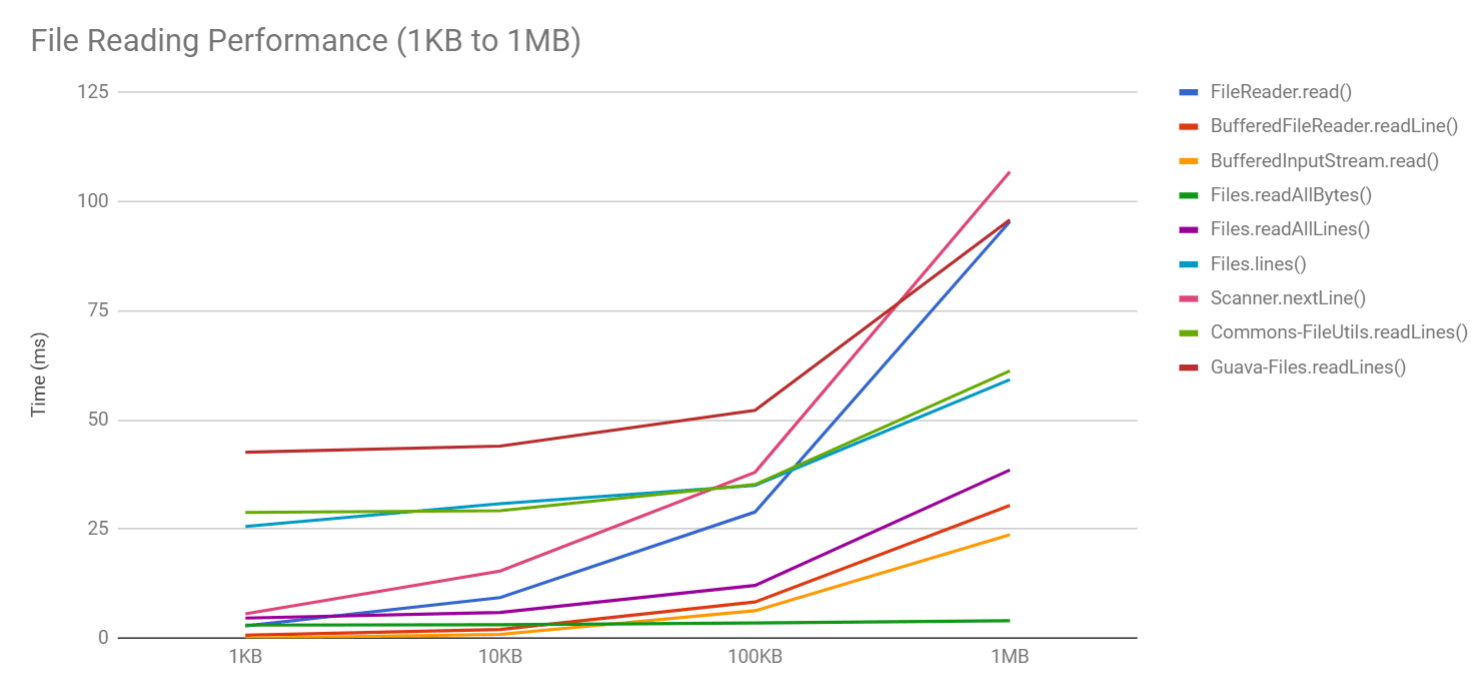

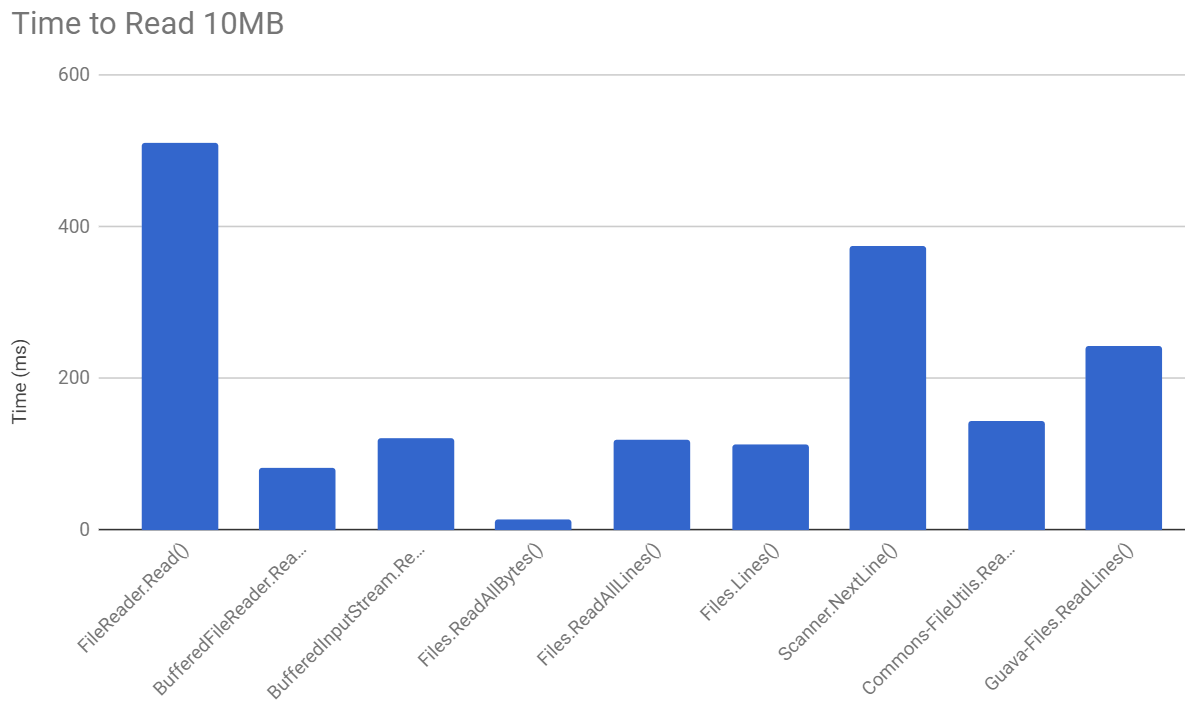

The following chart summarizes the above table but with the following changes:

- I removed java.io.FileInputStream.read() from the chart because its performance was so bad it would skew the entire chart and you wouldn't see the other lines properly

- I summarized the data from 1KB to 1MB because after that, the chart would become too skewed with so many under performers and also some methods threw a java.lang.OutOfMemoryError at 1GB

The Winners

The new Java I/O libraries (java.nio) had the best overall winner (java.nio.file.Files.readAllBytes()) but it was followed closely by BufferedReader.readLine(), which was also a proven top performer across the board. The other excellent performer was java.nio.file.Files.lines(Path), which had slightly worse numbers for smaller test files but really excelled with the larger test files.

The absolute fastest file reader across all data tests was java.nio.file.Files.readAllBytes(Path). It was consistently the fastest and even reading a 1GB file only took about 1 second.

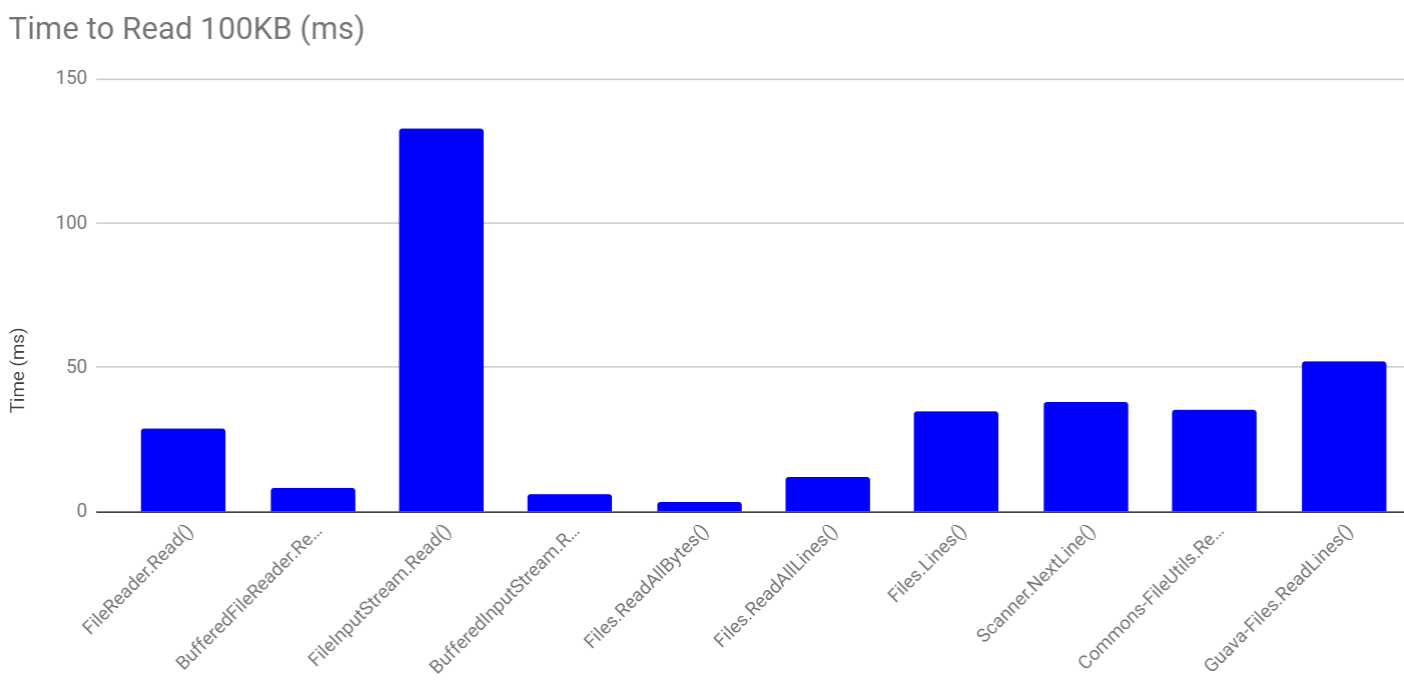

The following chart compares performance for a 100KB test file:

You can see that the lowest times were for Files.readAllBytes(), BufferedInputStream.read() and BufferedReader.readLine().

The following chart compares performance for reading a 10MB file. I didn't bother including the bar for FileInputStream.read() because the performance was so bad it would skew the entire chart and you couldn't tell how the other methods performed relative to each other:

Files.readAllBytes() really outperforms all other methods and BufferedReader.readLine() is a distant second.

The Losers

As expected, the absolute worst performer was java.io.FileInputStream.read(), which was orders of magnitude slower than its rivals for most tests. FileReader.read() was also a poor performer for the same reason: reading files byte by byte (or character by character) instead of with buffers drastically degrades performance.
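To illustrate the difference between the two styles, the sketch below counts the bytes in a file once with single-byte read() calls and once by reading into a byte array with read(byte[]). It is a hypothetical illustration rather than one of the measured methods; the class name, the method names and the 8192-byte chunk size are my own choices.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

public class ChunkedReadSketch {

    // Slow style: one read() call per byte.
    public static long countOneByteAtATime(String fileName) throws IOException {
        long total = 0;
        try (FileInputStream in = new FileInputStream(fileName)) {
            while (in.read() != -1) {
                total++;
            }
        }
        return total;
    }

    // Chunked style: each read(byte[]) call fills as much of the array as it can
    // and returns the number of bytes it actually read, or -1 at end of file.
    public static long countInChunks(String fileName) throws IOException {
        byte[] chunk = new byte[8192]; // arbitrary chunk size for illustration
        long total = 0;
        try (FileInputStream in = new FileInputStream(fileName)) {
            int bytesRead;
            while ((bytesRead = in.read(chunk)) != -1) {
                total += bytesRead;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file, created here so the sketch is self-contained.
        Path sample = Files.createTempFile("sample", ".bin");
        Files.write(sample, new byte[50_000]);

        System.out.println(countOneByteAtATime(sample.toString()));
        System.out.println(countInChunks(sample.toString()));

        Files.delete(sample);
    }
}
```

Both methods return the same count, but the chunked version makes far fewer calls to the underlying file.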

Both the Apache Commons IO FileUtils.readLines() and Guava Files.readLines() crashed with an OutOfMemoryError when trying to read the 1GB test file and they were about average in performance for the remaining test files.

java.nio.file.Files.readAllLines() also crashed when trying to read the 1GB test file but it performed quite well for smaller file sizes.
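A likely explanation is that these methods return a List holding every line of the file at once, so the whole file has to fit in the heap. The sketch below contrasts that with Files.lines(), which hands lines over one at a time as the stream is consumed. The class and method names are hypothetical, the code needs Java 8 or later, and I have not measured its memory use.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Collections;
import java.util.stream.Stream;

public class LineCountSketch {

    // Loads every line into a List first, so memory use grows with the file size.
    public static long countLinesInMemory(Path path) throws IOException {
        return Files.readAllLines(path, StandardCharsets.UTF_8).size();
    }

    // Streams the lines lazily; the file is not loaded into a List.
    public static long countLinesStreaming(Path path) throws IOException {
        try (Stream<String> lines = Files.lines(path, StandardCharsets.UTF_8)) {
            return lines.count();
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical sample file with 500 identical lines.
        Path sample = Files.createTempFile("sample", ".txt");
        Files.write(sample, Collections.nCopies(500, "some sample text"), StandardCharsets.UTF_8);

        System.out.println(countLinesInMemory(sample));
        System.out.println(countLinesStreaming(sample));

        Files.delete(sample);
    }
}
```

For small files the two are interchangeable; the streaming version is the safer choice when the file size is unknown.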

Performance Rankings

Here's a ranked list of how well each file reading method did, in terms of speed and handling of large files, as well as compatibility with different Java versions.

| Rank | File Reading Method |
|---|---|
| 1 | java.nio.file.Files.readAllBytes() |
| 2 | java.io.BufferedReader.readLine() |
| 3 | java.nio.file.Files.lines() |
| 4 | java.io.BufferedInputStream.read() |
| 5 | java.util.Scanner.nextLine() |
| 6 | java.nio.file.Files.readAllLines() |
| 7 | org.apache.commons.io.FileUtils.readLines() |
| 8 | com.google.common.io.Files.readLines() |
| 9 | java.io.FileReader.read() |
| 10 | java.io.FileInputStream.read() |

Conclusion

I tried to present a comprehensive set of methods for reading files in Java, both text and binary. We looked at 15 different ways of reading files in Java and we ran performance tests to see which methods are the fastest.

The new Java IO library (java.nio) proved to be a great performer but so was the classic BufferedReader.

Source: https://funnelgarden.com/java_read_file/
